Apple has quietly removed all mentions of CSAM from its Child Safety webpage following the controversy its plan ignited. This could indicate that Apple is reconsidering its plan to detect child sexual abuse images on iPhones and iPads.

Amid harsh criticism, Apple had already delayed the rollout of CSAM detection. Its Communication Safety features for Messages, by contrast, rolled out earlier this week with the release of iOS 15.2.

Explaining the delayed rollout on its Child Safety page, Apple said it took into account “feedback from customers, advocacy groups, researchers and others… We have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

The statement is now gone, suggesting that Apple could have shelved its CSAM detection plan for good.

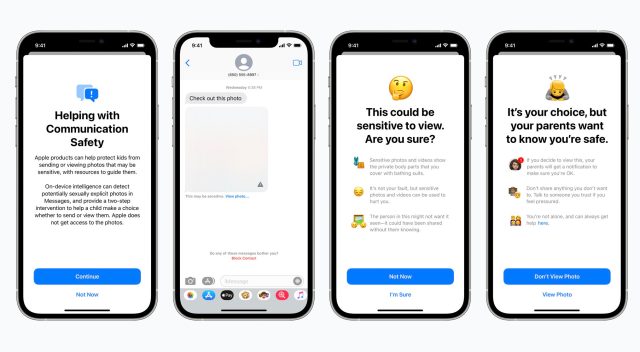

In August, the iPhone maker announced a new suite of child safety features. One of them entailed scanning users’ iCloud Photos libraries for Child Sexual Abuse Material (CSAM). Another, Communication Safety, would warn children and their parents when receiving or sending sexually explicit photos. Expanded CSAM guidance in Siri and Search was part of the plan as well.

After the changes were announced, they drew criticism from eminent privacy advocates and organizations, including Edward Snowden, the Electronic Frontier Foundation (EFF), Facebook’s former chief of security, university researchers, and even Apple employees.

The complaints shared a common thread: Apple’s method of detecting CSAM was a dangerous technology that bordered on surveillance. People also expressed concern that Apple could be pressured into scanning for other content to suit political agendas. Moreover, critics argued that the method was ineffective at identifying CSAM.

Apple initially tried to reassure users by releasing detailed information, FAQs, interviews with company executives, and other material. Eventually, it delayed the release, and iOS 15.2 arrived without CSAM detection. Now, it appears that Apple may have decided to halt the rollout, at least for the time being.

What do you think of this development? What could have happened if Apple had rolled out the feature anyway? Tell us in the comments below.

Update

Apple spokesperson Shane Bauer told The Verge that although mentions of CSAM detection had been removed from the website, Apple’s plans for CSAM detection have not changed since September. This means that the feature is still being developed for release in the future.

In September, the iPhone maker said, “Based on feedback from customers, advocacy groups, researchers, and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

[Via MacRumors]